The Research Integrity Project

Exploring digital forensic methods to uncover scientific misconduct and flaws.

Although this project is funded by different agencies and involves collaboration with different institutions, it is also a personal goal, and the content herein does not necessarily represent the official policies or endorsements of any institution.

Have you ever wondered if peer-reviewed scientific papers might contain errors, or even worse, spread misleading information?

We humans are fallible and sometimes driven by desires that can lead to misconduct. So, yes, scientists can also make mistakes and behave badly. How, then, can we know which scientific claims to trust? How can we know that the results of a specific paper are valid, reproducible, and trustworthy?

You might think the answer is to reproduce every experiment, but that is not an option: we do not have unlimited resources. The simplest way is to improve and maintain integrity in science.

By integrity, I mean the definition given by the U.S. National Science and Technology Council[1]:

Scientific integrity is the adherence to professional practices, ethical behavior, and honesty and objectivity when conducting, managing, using the results of, and communicating about science and scientific activities. Inclusivity, transparency, and protection from inappropriate influence are hallmarks of scientific integrity.

From my perspective, scientific integrity is a continuous process that addresses cases of bad science along three very important directions: Prevention, Detection, and Correction.

Prevention should include educating people on the do's and don'ts of publishing and keeping mistakes and inappropriate behavior from happening in the first place.

For detection, we should be discussing and implementing robust, transparent, and benchmarked systems capable of identifying inappropriate content and behaviors within and across publications.

And, for correction, we should be implementing policies that facilitate paper retraction and correction as soon as any error or act of misconduct is found and reported.

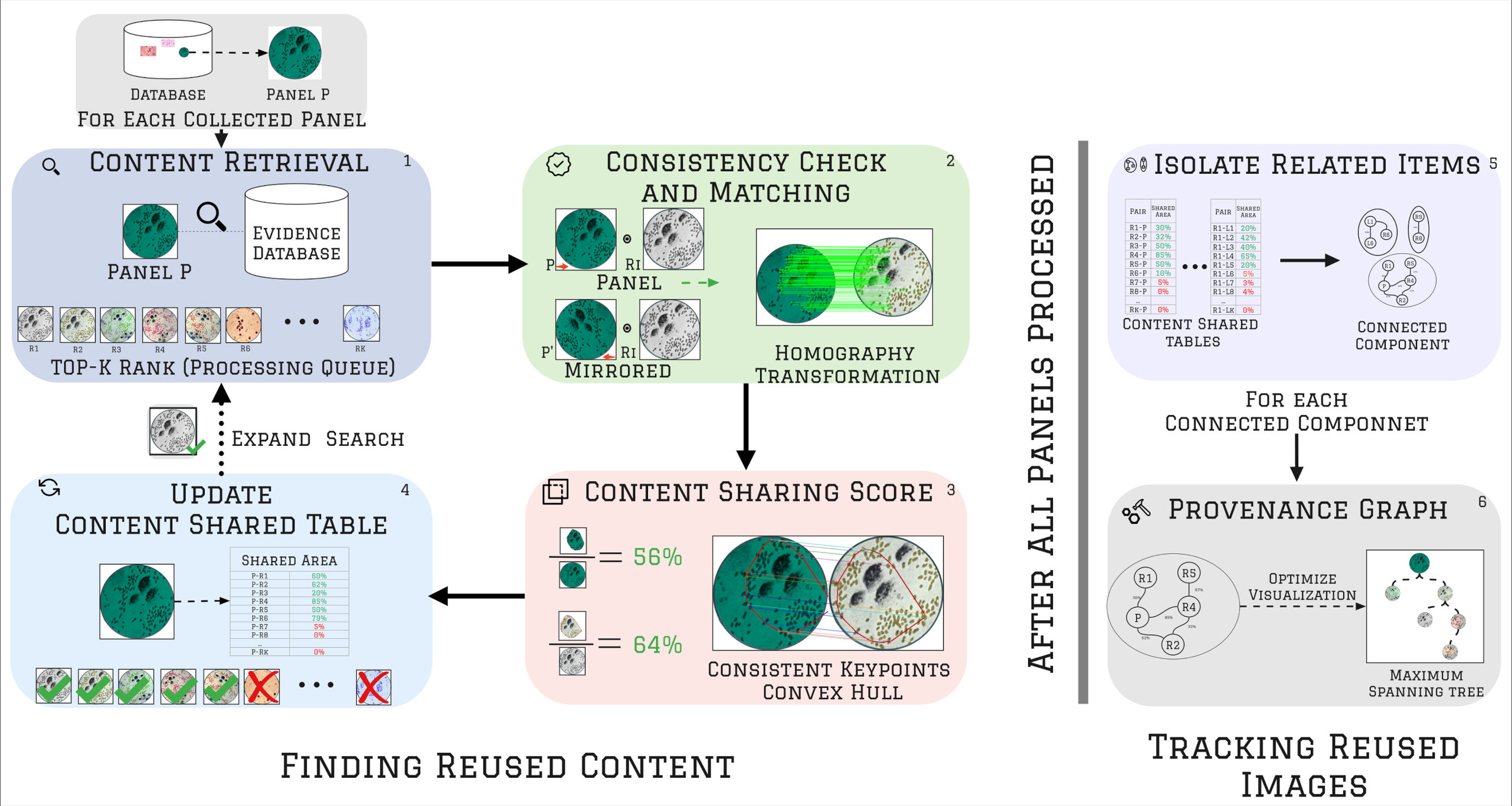

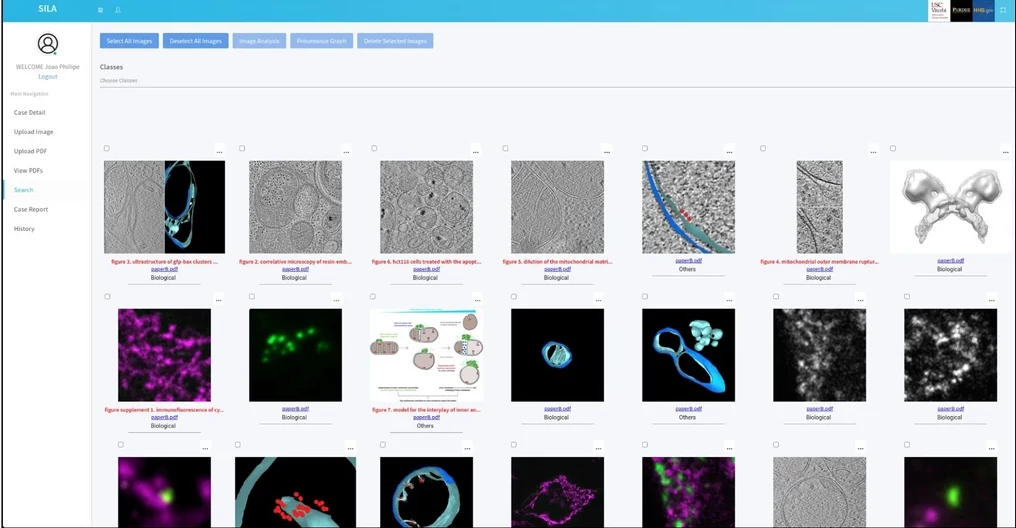

This project focuses on the detection part by applying digital forensics techniques to the scientific integrity context.

Project Motivation

Recently, the number of scientific articles making unverified or fake claims has increased, affecting how much people trust science.

A 2022 Dutch survey[2] asked 6,800 researchers about questionable research practices and misconduct in science. It showed that 50% of the researchers had committed at least one questionable act. When asked about falsification or fabrication, about 8% of the researchers admitted to at least one of these acts.

Fake scientific claims can be devastating for society and can promote unverified or false conspiracy beliefs. Consider the impact of the work by Wakefield et al.[3] linking the MMR vaccine to autism. This work is considered fraudulent[4] and took twelve years to be retracted (1998–2010). Its damage persists today through anti-vaccine ideas spread by documentaries and social media influencers[5], reaching more than 7 million people in the U.S. alone[6].

To better understand how the number of reported cases in science is evolving, we can look at the paper retractions recorded in the Retraction Watch Database. Plotting the number of retractions per year reveals an increasing trend, which could be attributed to honest errors or to intentional unethical acts.
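The counting step behind such a plot can be sketched in a few lines of Python. This is a minimal sketch, assuming the retraction dates have already been extracted from a database export as ISO-8601 strings (`YYYY-MM-DD`); the function names `retractions_per_year` and `text_bars` and the sample dates are hypothetical and are not part of the Retraction Watch data or tooling.

```python
from collections import Counter
from datetime import date
from typing import Dict, Iterable


def retractions_per_year(retraction_dates: Iterable[str]) -> Dict[int, int]:
    """Count retractions per calendar year from ISO-8601 date strings (YYYY-MM-DD)."""
    counts = Counter(date.fromisoformat(d).year for d in retraction_dates)
    # Sort by year so the series can be plotted from left to right.
    return dict(sorted(counts.items()))


def text_bars(per_year: Dict[int, int], symbol: str = "#") -> str:
    """Render a minimal text bar chart, one line per year."""
    return "\n".join(f"{year} {symbol * n} ({n})" for year, n in per_year.items())


if __name__ == "__main__":
    # Hypothetical dates for illustration only; these are NOT real Retraction Watch records.
    sample = ["2019-03-01", "2020-07-15", "2020-11-02", "2021-01-20", "2021-06-30", "2021-09-09"]
    per_year = retractions_per_year(sample)
    print(per_year)  # {2019: 1, 2020: 2, 2021: 3}
    print(text_bars(per_year))
```

A real analysis would pass the resulting year-to-count mapping to a plotting library instead of the text chart used here to keep the sketch dependency-free.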

Problematic and inappropriate scientific images have also been at the epicenter of the problem. After screening more than 20,000 scientific papers from different journals for possible image duplication, Bik et al.[7] found that about 4% contained inappropriate duplications, at least half of which showed features suggestive of deliberate manipulation. More recently, studies using automated tools found even higher rates of image reuse: about 6% in Bucci[8] and 9% in Acuna et al.[9].

Check the trend of retracted articles due to problematic images below.

Inappropriate behavior occurs in every type of venue, from predatory journals to the most prestigious ones. For example, a highly cited Nature article[10] (with more than 2,000 citations) presents an intriguing case of image manipulation: it was retracted in 2024, 18 years after publication, because of signs of excessive image editing[11].

Furthermore, illicit organizations known as paper mills are producing fake articles on a large scale[12]. This industry sells publications, authorship, and fabricated experiments to clients willing to put their names on whatever paper they can, aiming to inflate their citation scores and to collect the bonuses that their institutions and governments may grant for a higher number of published papers.

Project Goal

This project investigates different integrity problems in science, aiming to design and develop digital forensic solutions for their detection.

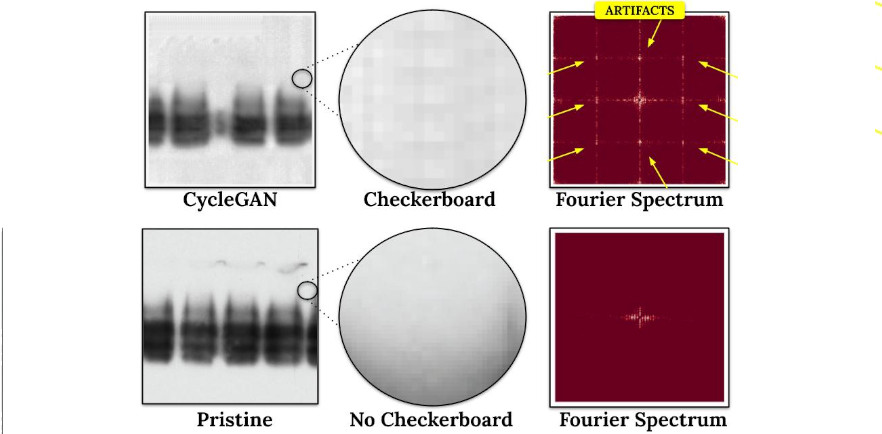

Currently, the project focuses on detecting image manipulation and reuse, as well as AI-generated images that mislead science. These tasks range from catching simple duplicated images mistakenly added to a paper to identifying publications produced by paper mills.
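As an illustration of the simplest end of that range, duplicated images, a common building block is perceptual hashing. The sketch below uses average hashing (aHash), a generic technique that is not necessarily the method used in this project: each image is reduced to an 8x8 grid of mean intensities, each cell becomes one bit (brighter than the overall mean or not), and two images whose 64-bit hashes differ in only a few bits are flagged as likely duplicates. It assumes grayscale images given as nested lists of pixel values; the function names and the distance threshold of 5 bits are hypothetical, illustrative choices.

```python
from typing import List, Sequence

# Assumption for this sketch: a grayscale image as rows of pixel intensities (0-255).
Image = Sequence[Sequence[int]]


def average_hash(img: Image, size: int = 8) -> int:
    """Average hash: block-average down to size x size cells, then one bit per cell
    that is brighter than the mean of all cells (first cell is the most significant bit)."""
    h, w = len(img), len(img[0])
    if h < size or w < size:
        raise ValueError("image must be at least size x size pixels")
    cells: List[float] = []
    for i in range(size):
        r0, r1 = i * h // size, (i + 1) * h // size
        for j in range(size):
            c0, c1 = j * w // size, (j + 1) * w // size
            block = [img[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def likely_duplicates(a: Image, b: Image, max_distance: int = 5) -> bool:
    """Flag a pair as near-duplicates if their hashes differ in at most max_distance bits."""
    return hamming(average_hash(a), average_hash(b)) <= max_distance


if __name__ == "__main__":
    # Synthetic 16x16 gradient, a uniformly brightened copy, and a transposed version.
    img = [[8 * r + c for c in range(16)] for r in range(16)]
    brighter = [[p + 20 for p in row] for row in img]
    transposed = [[8 * c + r for c in range(16)] for r in range(16)]
    print(likely_duplicates(img, brighter))    # True: identical hashes
    print(likely_duplicates(img, transposed))  # False: hashes differ in 32 bits
```

Average hashing tolerates uniform brightness changes and rescaling, which is why the brightened copy is caught, but it is easily defeated by crops, rotations, or local edits; those cases call for more robust forensic methods.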

As the project progresses, we expect to uncover other forms of scientific manipulation, which will also be investigated and addressed by developing new detectors. The active tasks will soon expand to text, statistical data, and metadata analysis to check for potential errors or unethical behavior in these modalities. The project's goal is to significantly improve the integrity of scientific publications and foster greater trust in research findings.

Talks

Project Publications

References

[1] Scientific Integrity Framework Interagency Working Group of the National Science and Technology Council. "A Framework for Federal Scientific Integrity Policy and Practice." January 12, 2023. Accessed June 2024.

[2] Gopalakrishna G, et al. Prevalence of questionable research practices, research misconduct and their potential explanatory factors: A survey among academic researchers in The Netherlands. PLoS ONE 17(2): e0263023, 2022. https://doi.org/10.1371/journal.pone.0263023

[3] Wakefield, et al. RETRACTED: Ileal-lymphoid-nodular hyperplasia, non-specific colitis, and pervasive developmental disorder in children. The Lancet, 351(9103):637–641, February 1998.

[4] F. Godlee, et al. Wakefield's article linking MMR vaccine and autism was fraudulent. BMJ, 342:c7452, January 2011.

[5] Amanda S. Bradshaw, et al. Propagandizing anti-vaccination: Analysis of vaccines revealed documentary series. Vaccine, 38(8):2058–2069, February 2020.

[6] Tara C. Smith. Vaccine rejection and hesitancy: A review and call to action. Open Forum Infectious Diseases, 4(3), 2017.

[7] Bik, et al. The prevalence of inappropriate image duplication in biomedical research publications. mBio, 7(3), July 2016.

[8] Bucci. Automatic detection of image manipulations in the biomedical literature. Cell Death & Disease, 9(3), March 2018.

[9] Daniel E. Acuna, Paul S. Brookes, and Konrad P. Kording. Bioscience-scale automated detection of figure element reuse. bioRxiv, February 2018.

[10] Lesné, S., Koh, M., Kotilinek, L., et al. RETRACTED ARTICLE: A specific amyloid-β protein assembly in the brain impairs memory. Nature 440, 352–357 (2006). https://doi.org/10.1038/nature04533

[11] Lesné, S., Koh, M.T., Kotilinek, L., et al. Retraction Note: A specific amyloid-β protein assembly in the brain impairs memory. Nature 631, 240 (2024). https://doi.org/10.1038/s41586-024-07691-8

[12] Holly Else and Richard Van Noorden. The fight against fake-paper factories that churn out sham science. Nature, 591(7851):516–519, March 2021.