Explainable Artifacts for Synthetic Western Blot Source Attribution

¹Universidade Estadual de Campinas, Campinas, São Paulo, Brazil; ²Politecnico di Milano, Italy; ³Loyola University Chicago, Chicago, IL, USA; ⁴Purdue University, West Lafayette, IN, USA

Abstract

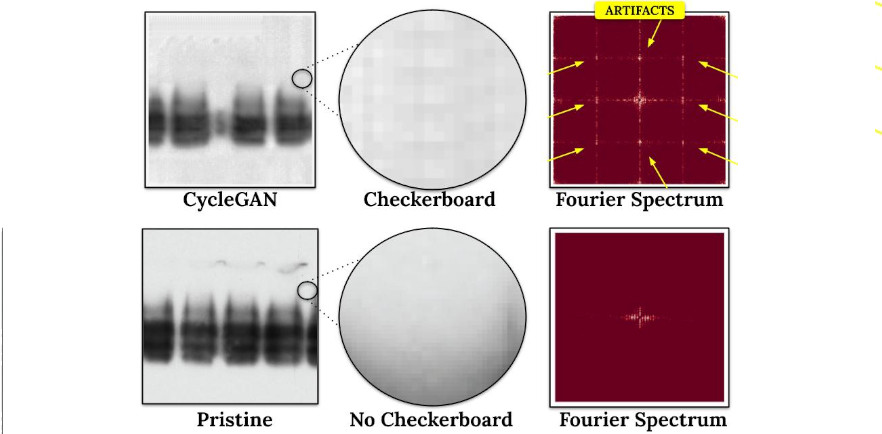

Recent advancements in artificial intelligence have enabled generative models to produce synthetic scientific images that are indistinguishable from pristine ones, posing a challenge even for expert scientists accustomed to working with such content. When exploited by organizations known as paper mills, which systematically generate fraudulent articles, these technologies can significantly contribute to the spread of misinformation about ungrounded science, potentially undermining trust in scientific research. While previous studies have explored black-box solutions, such as Convolutional Neural Networks, for identifying synthetic content, few have addressed the challenges of generalizing across different models and of providing insight into the artifacts in synthetic images that inform the detection process. This study aims to identify explainable artifacts generated by state-of-the-art generative models (e.g., Generative Adversarial Networks and Diffusion Models) and leverage them for open-set identification and source attribution (i.e., pointing to the model that created the image).

For further details, please refer to the full publication in IEEE Xplore or the arXiv version.

Citation

Cardenuto, J.P. et al. (2024) Explainable Artifacts for Synthetic Western Blot Source Attribution. 2024 IEEE International Workshop on Information Forensics and Security (WIFS), Rome, Italy, 2024, DOI: 10.1109/WIFS61860.2024.10810680

@inproceedings{cardenuto2024explainable,
  author={Cardenuto, João P. and Mandelli, Sara and Moreira, Daniel and Bestagini, Paolo and Delp, Edward and Rocha, Anderson},
  booktitle={2024 IEEE International Workshop on Information Forensics and Security (WIFS)},
  title={Explainable Artifacts for Synthetic Western Blot Source Attribution},
  year={2024},
  pages={1-6},
  keywords={Forensics;Conferences;Paper mills;Closed box;Organizations;Generative adversarial networks;Diffusion models;Security;Convolutional neural networks;Fake news;Western blots;synthetically generated images;image forensics;source attribution;scientific integrity},
  doi={10.1109/WIFS61860.2024.10810680}
}

Code and Data: GitHub Repository